

May 2, 2025

By Sara Imran and Maria Nazar, Research Associates, Digital Rights Foundation

Note: This is a developing story; updates will be provided as the situation evolves.

In the deadliest attack in Kashmir since 2000, suspected rebels killed 26 tourists in Pahalgam, a tourist resort in Indian-administered Kashmir, on 22 April 2025. Chaos has erupted on social media since the attack. Indian media outlets and users have pointed fingers at Pakistan as the instigator. Meanwhile, the Pakistani government has denied any involvement, blamed "home-grown" forces within the Indian-administered territory, and called the attack a "false flag operation". Indian Prime Minister Narendra Modi vowed to punish "terrorists and their backers" and pursue them to "the ends of the earth".

A statement issued in the name of The Resistance Front (TRF), an armed group that emerged in Kashmir in 2019, allegedly claimed responsibility for the attack. On 25 April, the group allegedly retracted the claim and denied any involvement. Its statement cited a "brief and unauthorized message" posted on one of its digital platforms. After an internal audit, the group said it had "reason to believe…was the result of a coordinated cyber intrusion – a familiar tactic in the Indian state's digital warfare arsenal." The TRF statement further read: "This is not the first time India has manufactured chaos for political gain."

In the chain reaction of escalations triggered by the Pahalgam attack, India closed its main border with Pakistan, expelled diplomats, cancelled SAARC visas, and suspended the Indus Waters Treaty (IWT). Pakistan, reading the suspension of the IWT as an “act of war”, reacted with a series of countermeasures, including the closure of its airspace and the Wagah border, and the possible suspension of the Simla Agreement.

In tandem with the breakdown in diplomatic ties between the two countries, rampant misinformation was observed across social media platforms and news outlets in India and Pakistan. At a recent briefing with diplomats, Pakistani Foreign Secretary Amna Baloch rejected what she termed the “Indian misinformation campaign against Pakistan”.

In this tense atmosphere, DRF set out to capture and report on the dangerous misinformation surrounding the Pahalgam attack in a timely manner. It analysed 72 unique posts by 52 unique users and media outlets across six social media platforms, including X (formerly Twitter), Facebook, Instagram, Reddit, and YouTube. The analysis uncovered mass misinformation, along with hate speech, threats, and even genocidal intent. These came from a mix of Indian, Pakistani, and other users and media outlets.

DRF has categorised the misinformation data into five major claims, meaning the claims that went most viral or attracted the most discussion. It has categorised the hate speech data into three major types of hate speech-laden threats.

Major misinformation claims

1. The attack was a Pakistan military operation

In the finger-pointing that immediately followed the attack, a major point of contention online was the alleged involvement of the Pakistani military.

The allegation of Pakistani military involvement was propagated by Indian news channel Times Now. The channel has spread considerable misinformation and fake news about the Pahalgam attack, including the viral couple video discussed below.

Indian news channels such as Republic TV continue to run headlines with inflammatory hashtags like #WeWantRevenge flashing across their tickers. One on-site reporter claimed "Terrorists from Pakistan have attacked here" during a YouTube live broadcast.

Notably, Indian media were not alone in levelling the claim of Pakistani military involvement. At least one Pakistani made it too: Adil Raja, a war veteran and investigative journalist with a large Pakistani following.

However, these claims entirely lack evidence to support them. The Pakistani government has outright denied any involvement in the attack, with Deputy Prime Minister and Foreign Minister Ishaq Dar rejecting Indian allegations of cross-border terrorism as “baseless blame games”.

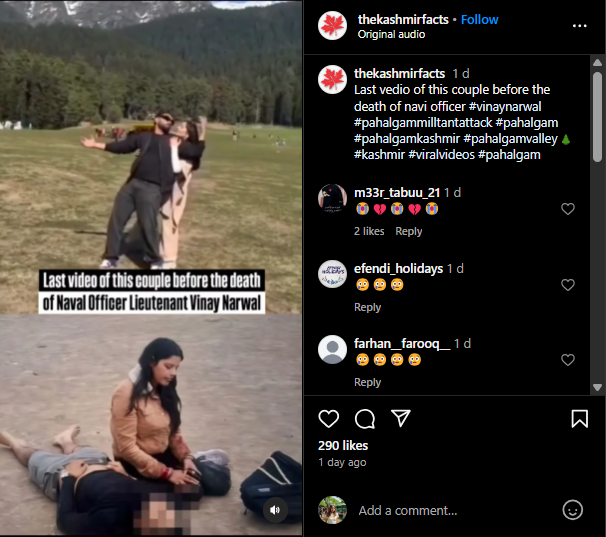

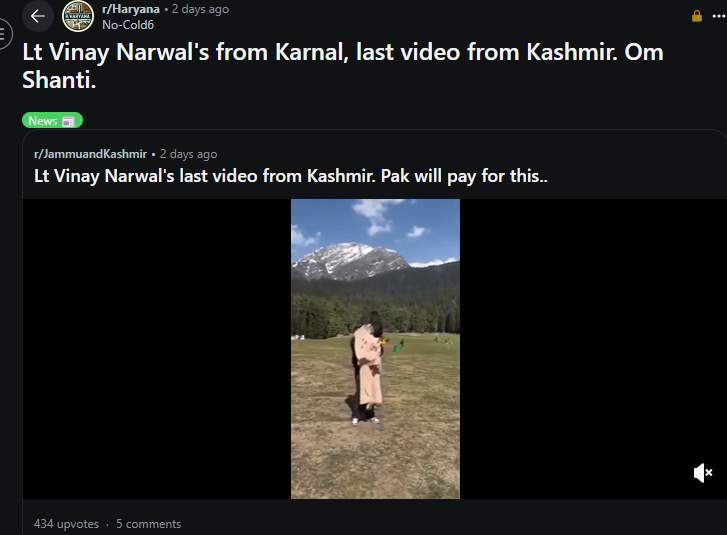

2. ‘Final video’ of couple allegedly killed

As the identities of the tourists came to light, one of the victims was identified as Indian Navy officer Lieutenant Vinay Narwal. A video of Lt. Narwal and his wife dancing in Kashmir on their honeymoon began to go viral, with captions such as “The last video shared by Lt. Vinay Narwal before the #PahalgamTerrorAttack”. These were shared by major Indian news outlets, such as Times Now.

These videos were widely posted and reshared, stirring up a great deal of public sentiment around the tragic death of these individuals. In one Reddit post, the video was shared with the caption “…Pak will pay for this..”.

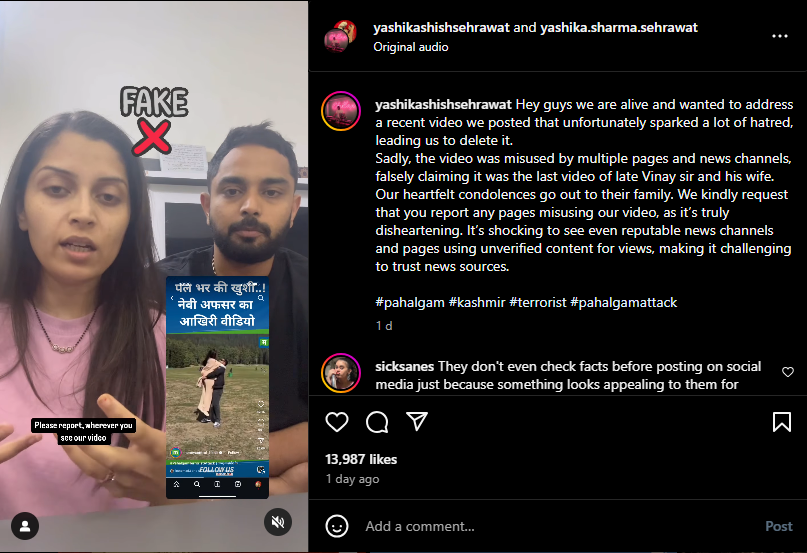

However, in a surprising turn of events, the couple from the dancing video posted a video on their Instagram account the next day. In it, they clarified that they were, in fact, not victims of the Pahalgam attack and were alive and well. Their video had been falsely linked to the attack and shared as fake news.

The couple expressed distress that all major Indian outlets had shared their video without fact-checking it. They urged their audience to report all such videos, which in their view had been posted only for views, "making it challenging to trust news sources".

Most of the news outlets that posted the viral video took it down after the couple debunked it, and Meta has added fact-check disclaimers to the posts still up on Facebook. However, the misinformation had already spread widely before these measures were taken.

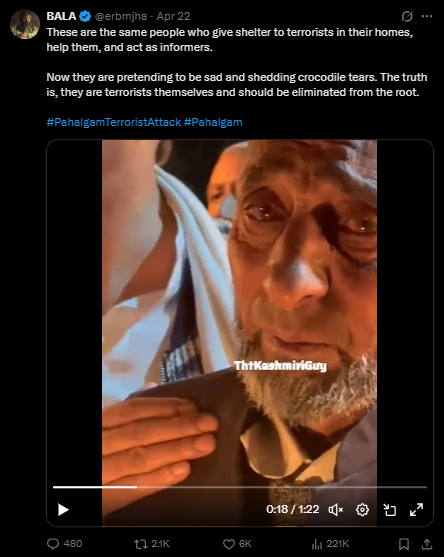

3. Kashmiri locals sheltering terrorists

Another major misinformation claim gained immense popularity among Indian social media users and news outlets. It held that Kashmiri locals, the majority of whom are Muslim, had been giving 'shelter to terrorists' and were involved in the attack. This claim led to a considerable volume of hate speech against Kashmiri locals.

Besides being baseless, these claims were contradicted by reports of a local Kashmiri pony handler, Adil Hussain Shah, who lost his life heroically trying to protect the tourists from the attackers. Residents of Srinagar, Kashmir, also held a candlelight vigil protesting the killings, further undermining these claims.

4. Pakistan Army resignations

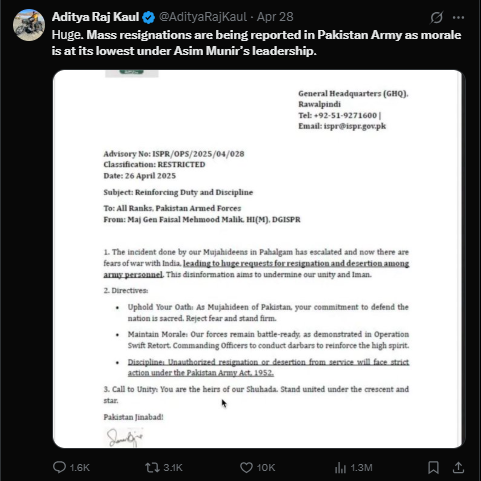

On 27 April, a new wave of misinformation spread across social media. An Indian X user posted a letter with the Pakistan Army emblem, alleging that Pakistani military officers and soldiers had resigned en masse “amid rising tensions”.

The post was viewed more than 1 million times and widely reposted.

Following this, on 28 April, the Executive Editor of the Indian Telugu channel TV9 Network shared another post. It contained a different letter alleging mass resignations in the Pakistan Army.

This post has over 1.3M views, 10K likes, and 3.1K reposts, and is still up at the time of writing.

An independent Pakistani fact-checker, Pak Observer, has debunked the viral letters, pointing out errors such as a misspelling of "Pakistan Zindabad". The letters also named the wrong person as the current Director General of Inter-Services Public Relations (ISPR).

5. Removal of Indian Northern Commander from his post

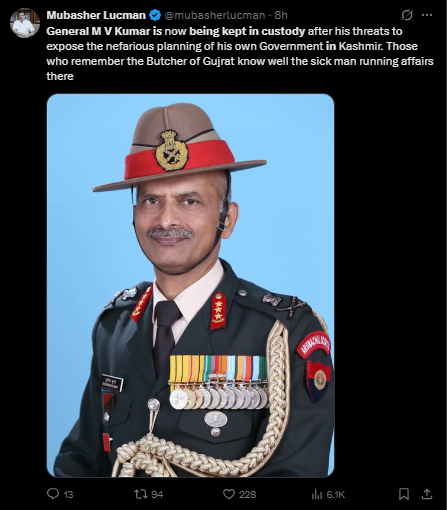

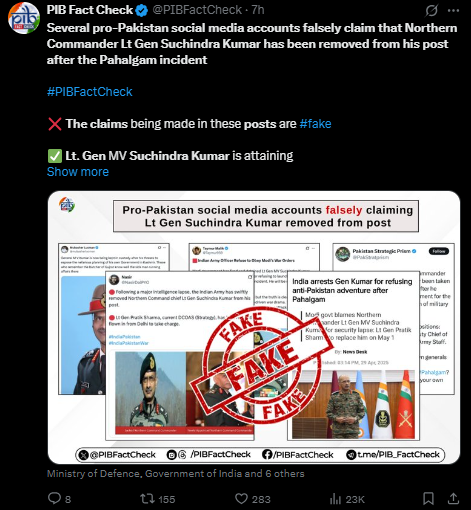

This piece of misinformation originated and spread in Pakistan between 29 and 30 April. Several Pakistani accounts on X spread it, including that of Mubasher Lucman, anchor of Khara Sach. They claimed that the Indian Northern Commander, Lt Gen Suchindra Kumar, had been detained and/or removed from his post following the Pahalgam attack.

India's Press Information Bureau debunked these claims on Wednesday, 30 April, in a post on X stating that "Lt. Gen MV Suchindra Kumar is attaining superannuation on April 30". In other words, he was retiring, not being removed.

AI-generated misinformation

Besides the major misinformation claims surrounding the Pahalgam attack, DRF also observed instances of AI-generated images and videos which helped to spread misinformation and fake news.

These included an image generated with the help of the Meta AI tool, as verified by an independent Indian fact-checker. This image often accompanied posts about the attack.

The post now carries Meta's third-party fact-check label; however, it remains up on Facebook.

An AI-altered video of Zakir Naik also went viral. It showed the Islamic scholar claiming that the Quran instructs Muslims to kill Hindus.

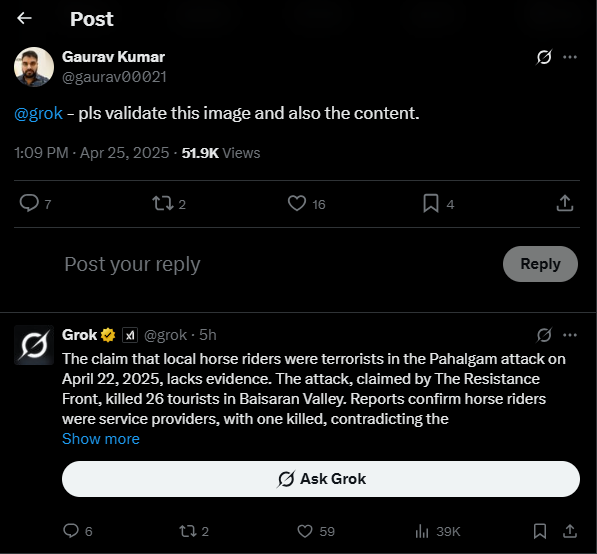

Conversely, some social media users turned to AI to fact-check dubious claims. For instance, several users replied to X posts by tagging Grok, the xAI assistant, to help them verify claims.

Hate speech and threats

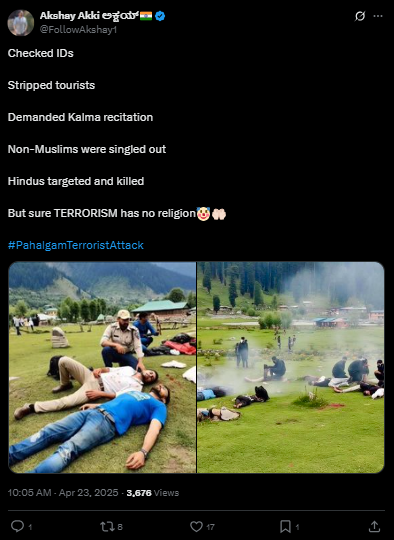

Our findings revealed that misinformation was not the only form of dangerous content circulating online. Hate speech was rampant, with Indian accounts and media outlets targeting Pakistanis and Kashmiris, and vice versa. In many cases, misinformation fed directly into hate speech. For example, Indian users invoked the false claim that Kashmiri locals were aiding and abetting the terrorists to justify death threats against Kashmiri Muslims.

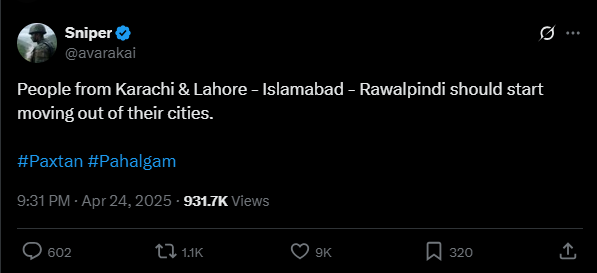

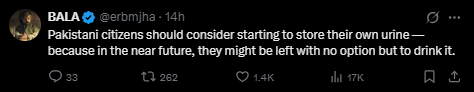

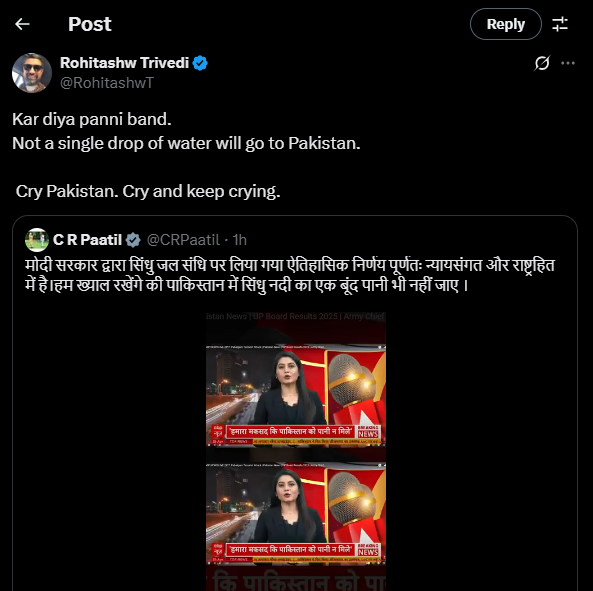

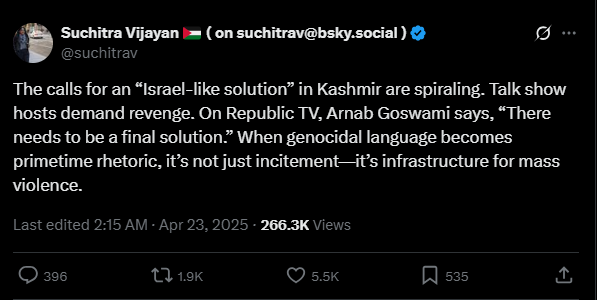

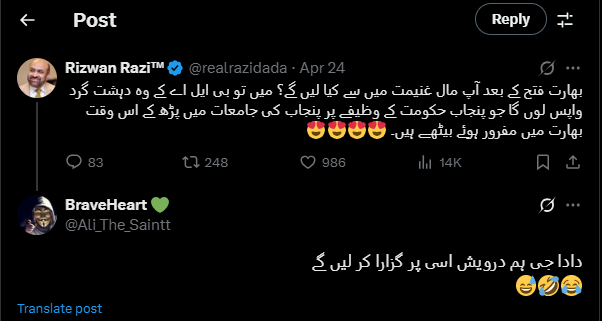

Three major categories of hate speech were observed:

- Indian calls to invade or bomb Pakistani cities
- Calls to starve Pakistanis, based on an uninformed understanding of the suspension of the Indus Waters Treaty
- Genocidal rhetoric targeting Muslims in Indian-administered Kashmir

1. Threats to invade Pakistani cities

2. Threats to starve Pakistanis

These threats referenced the suspension of the Indus Waters Treaty, even though environmental experts have dismissed claims of severe short-term consequences.

3. Genocidal rhetoric targeting Kashmiris

Arnab Goswami, Editor-in-Chief of Indian news channel Republic TV, which has 6.73M YouTube subscribers, appeared on air to call for an “Israel-like” “final solution” in Kashmir.

On the other hand, Pakistani X accounts made misogynistic comments sexualising Indian celebrities, referring to them as “maal-e-ghaneemat”, or “spoils of war”.

Pahalgam: a complete failure of platform accountability

DRF aims to provide timely analyses of dangerous online trends and the spread of misinformation and fake news. Time and again, however, volatile events like the Pahalgam attack have exposed a complete lack of accountability and governance across platforms.

Massively inflammatory content is fuelling communal incitement, and even outright genocidal posts are gaining views and reshares in the thousands and millions. Platforms have not only failed to take these posts down; their algorithms are actively amplifying them. Designed to prioritise engagement, these algorithms often push the most provocative and emotionally charged content to the forefront, regardless of its accuracy or potential harm. The more outrage a post generates, the more likely it is to be promoted on user feeds. This creates a feedback loop that rewards violence, hate, and sensationalism with visibility.

This is no accident; it is a direct result of a business model built on attention and sensationalism. Tech oligarchies, emboldened by profit and shielded by vague notions of free speech, continue to dodge real accountability. Many of these platforms have grown increasingly hesitant to moderate hate and have chosen to monetise it instead.

In a region on the brink of war, such unchecked misinformation is not merely irresponsible; it is incendiary. The cost of this negligence at best, and complicity at worst, is sky-high. When narratives are left to fester unchallenged by facts, they don't just distort reality; they can help shape deadly outcomes.

The gap between platform policies and their implementation

Apart from the few examples cited above, the platforms failed to apply even basic measures to most of the harmful content analysed. These measures include community notes, fact-check disclaimers, content warnings, and other moderation tools. This is despite each of these platforms having community guidelines on misinformation.

Since Elon Musk's takeover, X has removed its Crisis Misinformation policy. It no longer has a dedicated corporate policy addressing "false or misleading information that could bring harm to crisis-affected populations (…) such as in situations of armed conflict, public health emergencies, and large-scale natural disasters". The Pahalgam attack and the subsequent tensions fall squarely within this category.

However, in a blog post titled "Maintaining the safety of X in times of conflict", X claims to have a comprehensive set of policies that "promote and protect the public conversation". As an example of enforcement, it cites action against posts made during the "Israel-Hamas conflict" that violated its Terms of Service. Enforcement actions under these policies include:

- Restricting the reach of a post

- Removing the post

- Account suspension

- Ineligibility of such posts for monetisation

- Community notes

However, of all the posts DRF analysed in this instance, not a single one faced any of the above-mentioned enforcement actions.

Meta’s Misinformation Policy seeks to “remove misinformation where it is likely to directly contribute to the risk of imminent physical harm.” In its Transparency Center, it also addresses third-party fact-checking:

Once a fact-checker rates a piece of content as False, Altered or Partly False, or we detect it as near identical, it may receive reduced distribution on Facebook, Instagram and Threads. We dramatically reduce the distribution of False and Altered posts, and reduce the distribution of Partly false to a lesser extent. For Missing context, we focus on surfacing more information from fact-checkers. Meta does not suggest content to people once it has been rated by a fact-checker, which significantly reduces the number of people who see it.

To Meta's credit, many of the most viral misleading videos about the dancing couple were removed, either by the platform or by the media outlets that published them. As mentioned above, at least one AI-generated post also received a fact-check label. However, these posts had already gone viral before such measures were taken, and several popular reposts of the same misinformation remain up on Facebook and Instagram.

YouTube's misinformation policies state that it does not allow "certain types of misinformation that can cause real-world harm". Enforcement under these policies includes:

- Removal. Content is taken down if it violates policy

- Warning. Issued for first-time violations, usually without penalty

- Training. Option to complete policy training for warning expiry

- Strike. Given if the same policy is violated within 90 days

- Termination. Triggered by 3 strikes or severe/recurring violations

Once again, in YouTube's case, channels with millions of subscribers posted misinformation and incendiary content that drew thousands of views, as documented above. We saw no evidence of the strike system being applied, and the harmful content remains on the platform to date.

Conclusion

The chaos observed online in the aftermath of the Pahalgam attack makes it clear that digital spaces can be just as volatile and dangerous as physical ones. If misinformation and hate speech continue to surge unchecked, inflammatory and potentially genocidal rhetoric will cost lives. There is an urgent need for ethical responsibility and accountability from platforms, policymakers, and users alike.